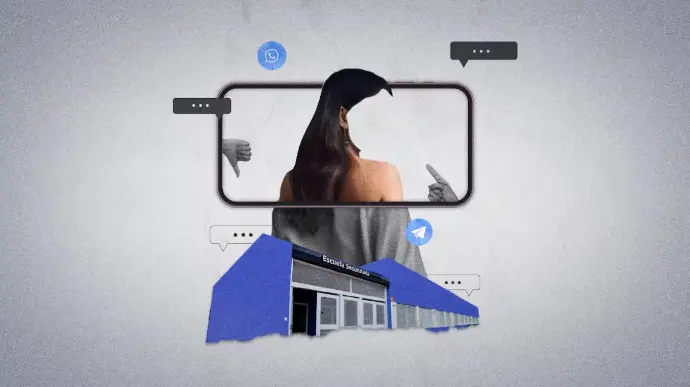

Deepfakes in schools: cases of students using AI to create sexual photos of classmates that then go viral are on the rise

SPECIAL REPORT

Hundreds of photos. Marketed through Discord, Instagram, Telegram. Nude, in underwear, or swimsuits. As technology advances, the risks grow, and schools are now more alert than ever. During 2024, at least a dozen cases were reported across the country in which a student or group of students took photos of their classmates and edited them with Artificial Intelligence to undress them.

The faces are those of the affected girls, the bodies are created by the editing platform. And together they form an erotic image of a teenager. The viralization, in just hours, can be endless, as can the consequences.

There, too, begins an ordeal. Dozens of girls are affected and violated, even though the photos are edited, and are left with complexes, self-esteem problems, and psychological issues. School transfers end up being an unavoidable solution to prevent further suffering for the teenagers, but they leave a taste of unfairness.

Below are the keys to better understanding how this phenomenon grew over time, its consequences, and how to address the issue with teenagers.

The Judicial Perspective: The Three Types of Deepfakes, the Responsibility of Apps, and How They Are Investigated

The dissemination of child sexual abuse material is a problem that has grown exponentially in recent years. The added danger is the emergence of Artificial Intelligence as a tool for this crime. What was previously merely disseminated is now being created and disseminated.

Global reports confirm the growth of this activity: the latest report from Europol (the European Police Office) indicates that artificial intelligence is increasingly used to produce child sexual exploitation material and "makes the identification of victims and perpetrators more complicated."

A survey by the Internet Watch Foundation (IWF) indicated that, since the incorporation of Artificial Intelligence to manipulate images of minors, there has been a 360% increase in sexual photos published online of children between the ages of 7 and 10.

Cybercrime prosecutor Lucas Moyano indicated that one of the dangers posed by artificial intelligence is that “even a harmless video of a child can be transformed into images of child sexual abuse.”

In this regard, he noted: “AI poses an unprecedented challenge in the fight against child exploitation material. While this technology has great potential, it is crucial to develop mechanisms to prevent its use for illicit purposes and protect children.”

Therefore, he highlighted the main reasons why AI amplifies this problem.

Generation of Hyperrealistic Images: This makes it possible to generate sexual exploitation material featuring children who have never existed, making it difficult to detect and eliminate.

Amplification of Production: This aspect saturates networks, hampering detection efforts.

Rapid Diffusion: This facilitates the rapid distribution of this material over the internet and makes it more accessible to consumers.

Difficulty in Identification: The high quality of AI-generated images makes it difficult to distinguish them from real images, complicating the identification and prosecution of those responsible.

The three types of deepfakes

The Budapest Convention on Cybercrime, in its Article 9, established the three types of productions that exist for these cases:

Virtual material: These are images of minors that are completely fictitious, both the body and the face. This type of case does not involve real children.

Technical production: This type of case involves adults who appear to be minors through various methods, such as image retouching with digital tools.

Artificial production: This refers to the representation of children or adolescents through drawings or other forms of animation, such as Japanese cartoons known as "anime."

How school deepfakes are investigated and what legal consequences they may have

The Cybercrime Prosecutor of the UFI No. 22 of Azul, Lucas Moyano, explained to TN the step-by-step process of an investigation related to school deepfakes.

Moyano explained that the first measure they take is related to containment: “We don't just think about the victim, but also the entire family. When this type of case occurs, it's an attack on the family itself. Parents often don't know how to help their children.”

The second step is to move forward with the investigation and preserve the victim's digital integrity. “It's key to know if they know of the existence of other victims in order to make progress in detecting the perpetrator.”

For investigators, it's crucial that the victims or their families know whether the images were shared on platforms or media, or whether any CBU or CVU (Argentine bank and virtual-wallet account identifiers) is involved. "That's when we begin to unravel the traces left by the crime," he noted.

"It's important to preserve the evidence and verify how they learned about the situation. From there, a prompt report is key," the cybercrime specialist explained about the investigation.

In that sense, he added: "We have to look at each case individually. In some of the deepfakes, the creator of the content was contacted because they were the one sharing the images. And then we have to retrace the chain. Through judicial practice, responsibility can be determined."

Regarding the offense that this type of case constitutes, Moyano explained that it can be pursued through both criminal and civil proceedings, with the aggravating factors that it could involve gender-based violence and a violation of personal data.

The prosecutor explained that, from a criminal perspective, there are two possible approaches. “The first is Article 128. If you create a montage with a minor's face in content with sexual characteristics, it would constitute a crime.”

Secondly, he referred to the role of the Olimpia Law on digital violence: “It can be seen as a way to attack women to make them suffer. It is a specific law that addresses this and also expands gender-based violence.”

For the prosecutor, cases where students create sexual images of their classmates should be framed within a context of gender-based violence “due to the position of the accused toward the victims and the sexual objectification to which they were subjected.”

Argentina's Law 26.485, on protection against violence toward women, establishes the category of "digital or telematic violence for conduct that affects the victim's reputation" and expressly addresses the case of "dissemination, without consent, of real or edited digital material, intimate or nude, attributed to women."

In civil terms, Moyano referred to cases where images of male or female students are sold through platforms such as Telegram. "There may be a civil action regarding damages related to image protection," he explained.

"Many times these images can be used to generate bullying. Parents are responsible for the actions of minors, so they may be held liable. And if this type of practice is established in the school environment for bullying, we can even talk about the school's liability," he explained.

The crime carries a sentence of up to six years if the accused or guilty party is over 16 years old. If the accused is a minor, the crime falls under the Juvenile Criminal System, and the victim can only pursue civil action to compensate for financial damages.

App Liability in School Deepfakes

Beyond the calls for progress in raising awareness about the responsible use of Artificial Intelligence, there is a point that is rarely discussed: the liability of the apps that generate these photos.

The vast majority of social media platforms have specific algorithms that can detect when some type of child sexual abuse material is being disseminated or shared. However, these algorithms are not implemented in apps that generate images with artificial intelligence.

“It would be necessary for algorithms to be able to detect when child sexual abuse content is being created or to hold the app that generated that content civilly liable,” stated prosecutor Lucas Moyano.

In this regard, he added: “There must be responsible use of the digital environment. Legal applications are advancing by leaps and bounds, and legal regulation always lags behind. We need to be close to such a dangerous tool.”

To combat this, Moyano proposed four key measures:

Dissemination of Images Without Consent: Sexual Violence

Whether the images shared without consent are real or fictitious creations of AI, the violence behind them remains. Regarding this, Soledad Fuster, a psychologist specializing in digital violence, emphasized: “Sexual violence is violence that deprives a person of the right to make decisions about their own body.”

“The impact is felt not only in the immediate digital environment, where the content goes viral quickly as it spreads among acquaintances, but also in its spread to thousands of people in just a few days,” she added.

This, as the professional explained:

Attacks people's privacy and intimacy.

Violates rights.

Has a psychological, emotional, sexual, and behavioral impact.

Affects the physical and digital environments where the person carries out their activities.

These situations cause victims to not want to go to school from then on or stop frequenting common places with the people around them. “It has medium- and long-term effects. Socially, it affects trust in people, relationships, and fear. It causes girls to feel affected again and again, whether because a photo was edited and they were undressed, or because they freely decided to share a photo with their consent and then it was disseminated without their consent. Every time someone sees their photo or video, they are undressed again without their approval,” Fuster added.

She also emphasized something fundamental: this isn't just about digital violence, but rather about real consequences, impacting the victims in reality. “The only thing that's digital is the medium used to perpetrate violence,” she emphasized.

To begin with, Fuster indicated that work with adolescents must begin with prevention and awareness-raising within the educational community itself, and to achieve this, they must be trained and have the tools to help detect these cases. “What happens in digital environments, whether it happens inside or outside of school, impacts students. The virtual world isn't just about being inside or outside of school; it's about where the child is,” she insisted.

She then explained that prevention must be addressed by approaching Comprehensive Sexual Education (ESI, by its Spanish acronym) from a digital perspective. “It's necessary to work with families, provide them with information, and share news and guides, whether through workshops with families or by sharing information with them. Coordinated work is essential, always focusing on ESI as a cross-cutting theme.”

Behind this is ongoing work with students in schools, helping to raise awareness about the psychological impact that digital violence has on victims. This also includes providing them with tools so that, if they learn of this situation, they can act to protect the person being attacked. “We must stop this viralization. If it's already gone viral, stop the content from circulating, without liking, opening, sharing, or commenting. We must report the content, delete it, and ask the rest of our classmates to do the same, based on shared responsibility. It goes viral precisely because one person shares it, and then one after another continues to participate in its dissemination,” Fuster noted.

On the other hand, she called for working with the person who edited this content and initially disseminated it. “Promote reflection. This is what we must aim for, so that whoever caused this situation can understand the consequences of what they did. We understand the context, and from there we must intervene, promote critical thinking to evaluate their actions, talk about empathy so they can see how the affected girl may be feeling, provide tools to work from home, and, on the other hand, work with the child from the educational institution, taking disciplinary measures, always with pedagogical purposes,” Fuster added.

In addition to disciplinary measures, the psychologist stated that emphasis must be placed on whoever created or disseminated the material: that they must also actively participate in repairing the damage caused, not only raising awareness, but being part of the reparation process. “Each institution can consider an intervention: produce a written work aimed at preventing these situations of violence, interview a leading expert in digital violence. If the student had the intention and the context was appropriate, they can apologize.”

On the other hand, she referred to the "co-responsibility" aspect. "It's necessary to work with the group because there was one who edited the content, another who shared it, others who spread it, and millions who made the post go viral in just a few hours or minutes. This has to do with the fact that a million people were giving this content greater visibility on the internet. We need to work on co-responsibility to prevent it from happening again," she noted.

Regarding the victims, Fuster stated: "We must protect those affected, listen to them, and intervene based on their needs." "We protect the affected girl and, following the Ministry of Education's protocol for dealing with situations of digital violence, we establish disciplinary measures and strategies for restorative measures, including the possibility of changing the student's course or shift, always guaranteeing school continuity," she added.

“Violence is violence, whether physical, verbal, or digital, because it impacts and harms those who suffer it. It is our responsibility to safeguard the rights of students,” the psychologist added. “It is important for schools to move away from an adult-centric approach when examining the facts and interpreting their impact. Many adults still think that if it's virtual, it's not that serious. They continue to contrast virtuality with reality, saying, ‘Just block it and that's it,’ minimizing and making invisible the suffering of so many children.”

“Often it's not just about closing the social media profile they had, but it impacts their entire lives. We need to provide information to families, and work on everyday issues within the institution. Banning isn't a strategy with adolescents: it's about educating, encouraging reflection, and seeking shared responsibility. But it's very difficult when content is circulating. It's hard to put words to the situations that are occurring, and when you do, you see mocking sniggers and glances among many students who remember the content they saw and it continues to circulate,” she said.

In closing, she insisted: “Adults, in one way or another, are always arriving late. Protocols emerge when situations are already occurring, and they prevent worse damage, but due to a lack of information and awareness, as a society, we are arriving late.”

Deepfakes in Schools: What Are the Protocols?

At the end of last year, the City of Buenos Aires implemented a protocol to intervene in cases of cyberbullying or the dissemination of intimate content about students. This protocol must be implemented in both public and private schools.

The Buenos Aires government explained to TN that once they become aware of an alleged episode of digital violence—such as a deepfake in schools—they suggest implementing Law 223, which consists of interviewing the students involved to begin the process of addressing the problem.

In response to such a situation, the City of Buenos Aires clarified that "the student affected is not being separated, but rather the person who committed the act." They warned that "the necessary curricular adaptations must be guaranteed" so that students can continue attending classes.

They also detailed that the workshops held for students, families, and school teams "include specific content on the risks of deepfakes and strategies for identifying and reporting them."

“Restrictions on the use of platforms and websites within the educational environment are regularly updated to minimize access to tools that could facilitate the creation and distribution of deepfakes,” the City Government added.

The Digital Education Department of the Directorate of Teacher Training acknowledged to TN that school deepfakes are an “emerging phenomenon” and warned of the importance of “promoting safe educational environments” in which initiatives aimed at “training, research, and regulation of the use of technologies” are developed.

The City Government announced that in 2025, a commission composed of digital education specialists will be formed to “design strategies to promote digital skills in teachers and digital citizenship in students, addressing topics such as ethics in the use of AI.”

Artificial Intelligence: Adolescence and Family Intervention

Given the advances of Artificial Intelligence, limited adult supervision, and the freedoms adolescents acquire, psychologist Mariana Collomb, who specializes in motherhood, parenting, and family, explained: “The use of AI at such an early age is complex, and there are no studies on how it will influence the future. It complicates brain connections and existence; it's not natural; it's false, created by technology.”

For the specialist, the misuse of AI in creating photos that could affect others can harm adolescents' overall well-being:

Comparing themselves to a false image creates body dysmorphia.

Mental disorders due to a real or imagined defect.

It transforms into an obsessive idea driven by the search for perception.

This, in turn, generates side effects regarding their relationship with their surroundings:

They avoid social relationships.

They feel exposed and uncomfortable.

Another person's invasion of their privacy exposes them.

From a very early age, young people begin sharing intimate images. This requires prior self-care. “What effect will it have if it's not kept private between two people, if it's shared without consent?” Collomb asks.

As a solution, the psychologist proposes more family involvement and Comprehensive Sexual Education (CSE) as a fundamental basis to help establish limits. “Self-care and that of others. Awareness about sharing personal things with society, bonds, and the viralization of images,” she expressed.

Regarding the inappropriate use of technology at an early age, the professional stated: “A mental health epidemic is expected due to everything it can affect young people: developmental delays, frustration tolerance due to the search for immediacy, anxiety, depression, body dysmorphia, anxiety over likes and acceptance.”

“It's more common among younger children due to hormonal factors, because they search for sexual content online and aren't yet developed or mature enough to understand what they're seeing. This also affects the way they relate to their peers, the way they relate sexually, because what appears in pornography isn't real,” she added.

“The violation of that privacy, even though the image isn't real, interferes with well-being and violates ethics and morals.”

In this regard, she clarified that before the age of 13, children aren't mature enough to understand the difference between reality and fiction, and that's where pornography comes into play.

"Both the victim and the perpetrator will, in some way, want to change schools afterward to start over. The victim is embarrassed by facing classmates, not seeing the aggressor, which generates rejection, criticism, and stares, and the aggressor is sometimes isolated by his environment for what he did," she added.

Regarding this issue, Fuster added: “It appears most frequently between the ages of 11 and 15, as a source of curiosity, the beginning of exploration. It has to do with the immaturity of thinking about the consequences, but it's not a coincidence that this is the age at which families start leaving children with their cell phones, either to avoid intruding or because they don't understand what they're doing. Adolescents begin to gain autonomy and freedom, begin to go to school alone, and begin to use their devices independently, losing the adult perspective. We need to take an interest in what children do in physical and digital environments.”

“Promoting children's autonomy doesn't mean leaving them alone. There are families who shirk their parenting responsibilities. As adults, we need to continue taking on the caregiving role, establishing guidelines within the home, and promoting empathy, but also the need to consider how the other person might be experiencing it, what they might need, regardless of what I might feel. Listening to the children when they talk about their suffering with empathy,” she concluded.

First-person accounts

Virginia is the mother of a student at the Compañía de María Institute in the Buenos Aires neighborhood of Colegiales. At that institution, three students were accused of editing at least 400 photos using Artificial Intelligence (AI) with the faces of their classmates, girls from other classes, graduates, and even teachers, and merging them with naked bodies.

In this instance, the case was split in two: parents who had proof of the editing and went to court, and those who were alerted by the school about what happened but didn't have the photos.

"It all started with a group of second- and third-year boys who were getting together to play PlayStation. They were using a program that limited the number of photos they could edit, so they opened different accounts and took more than 400 photos of students, former students, and teachers," the mother of one of the victims told TN.

The woman claims that when the parents approached the school to receive a response from the authorities, many had evidence, namely photos with the students' faces and edited bodies. "Others were notified by the school when the boys themselves named the girls," she explained.

"The school was very bad. They couldn't resolve it in a more normal, common-sense way within the institution. They involved people from the Public Prosecutor's Office, but they couldn't do anything. After the winter break, we managed to get the only person involved who was in my daughter's class removed from school, not by their decision, but by the Ministry's decision, because the boy was having a bad time," she clarified.

The rest was chaotic. The complainant's daughter started attending another school this year. A classmate also decided to leave the institution. “For us, the whole year was quite exhausting. It was difficult to get a spot at another school. My daughter didn't want to return. We had to do a lot of work so she could finish the year there because she didn't have much time left,” she concluded.

She was unable to pursue the claim, but the parents who had proof of the photos continued to pursue the case in court.

In October of last year, another case came to light, this time at a private school in San Martín. There, a 15-year-old student was accused of creating and selling fake images of his nude classmates using Artificial Intelligence (AI).

The complaint was filed in August by a group of parents of teenagers attending Colegio San Agustiniano. They discovered the case through another classmate, who posed as a buyer and obtained the CBU (bank account identifier) and photo of the student making the sales, thus confirming his identity.

The student used the Discord platform to create groups, inviting other students to join a private chat. There, he shared photos and videos of girls, many of whom were his classmates. But these images were fake, as he manipulated them using AI to "undress" them.

According to the complaint, the group reached more than 8,000 members, both minors and adults.

Florencia, the mother of one of the victims, explained to TN how they were able to uncover the case: "A classmate of his bought a folder and saw everything. He showed us the evidence, and with that, we filed a complaint." She also explained that the boy took profile photos of the students and used only their faces, but the rest of their bodies were not theirs.

According to her, there were at least 22 victims of this crime, but she asserts that more from other schools were also affected. "When I joined the group, there were images of many teenagers, and the users were both adults and minors," she said.

She also said that the emotional impact on the victims was immediate: the teenager felt ashamed and didn't want to return to school.

La Plata was another city that experienced a scandal related to this issue. In May 2024, a 13-year-old boy, a student at San Cayetano School, doctored photos of his classmates, added naked women's bodies, and made them go viral. The images spread throughout the institution, and the parents of the affected girls filed a complaint.

According to sources close to TN, the case came to light when the affected students, aged between 13 and 15, reported that they had received the doctored images. “They were crying inconsolably, and one of them said that a classmate had altered her photos, had put the bodies of naked women on them, and had made them go viral,” they stated.

From then on, everything was chaos within the institution because “the images seemed real.” It was also learned that at that time, when the classmates found out what had happened, they tried to attack the accused, and the boy's parents had to remove him from the school.

Furthermore, the student was reprimanded and “invited to leave” the institution, even though the incident took place off-campus. It was learned that the boy who shared the photos was a second-year student, while some of the students involved were his classmates, and others were third-year students.

These are some of the cases reported in the City and Province of Buenos Aires. However, similar incidents have also occurred elsewhere in the country: in Córdoba, Entre Ríos, Chaco, Río Negro, Santa Fe, and even at a university in San Juan.

Apps and Platforms That Undress Photos with Artificial Intelligence

The internet is as vast as it is dangerous. Today there is a wide range of apps and platforms that undress photos with Artificial Intelligence, and although their requirements differ, accessing them is not difficult at all.

They are used not only to undress the person in an image, but also to create "virtual girlfriends" with whom one can interact.

These tools use machine learning algorithms and neural networks to create a wide variety of content, including NSFW images.

An analysis conducted by this outlet of 17 platforms found that all have more than 3 million active users, and several reach 4 million. Some offer free options and others charge fees ranging from $3 to $25.

IHRO NEWS
